[Spark] SPARK Concepts Summary


- Glossary

- Spark Cluster Mode Overview

- Spark-Submit Execution Order

- Running on YARN

- YARN Cluster Mode

- YARN Client Mode

- Deployment Mode Summary

Glossary

| Term | Meaning |
|---|---|
| Application | User program built on Spark. Consists of a driver program and executors on the cluster. |
| Application jar | A jar containing the user's Spark application. In some cases users will want to create an "uber jar" containing their application along with its dependencies. The user's jar should never include Hadoop or Spark libraries; these will be added at runtime. |
| Driver program | The process running the main() function of the application and creating the SparkContext. |
| Cluster manager | An external service for acquiring resources on the cluster (e.g. standalone manager, Mesos, YARN, Kubernetes). |
| Deploy mode | Distinguishes where the driver process runs. In "cluster" mode, the framework launches the driver inside of the cluster. In "client" mode, the submitter launches the driver outside of the cluster. |
| Worker node | Any node that can run application code in the cluster. |
| Executor | A process launched for an application on a worker node that runs tasks and keeps data in memory or disk storage across them. Each application has its own executors. |
| Task | A unit of work that will be sent to one executor. |
| Job | A parallel computation consisting of multiple tasks that gets spawned in response to a Spark action (e.g. save, collect); you'll see this term used in the driver's logs. |
| Stage | Each job gets divided into smaller sets of tasks called stages that depend on each other (similar to the map and reduce stages in MapReduce); you'll see this term used in the driver's logs. |
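To make the relationship between Job, Stage and Task in the glossary concrete, here is a toy planner in plain Python. It is a simplified sketch, not Spark's DAGScheduler: it assumes a linear chain of operations, treats every operation in the hypothetical `WIDE_OPS` set as a shuffle boundary that starts a new stage, and gives every stage the same number of partitions (one task per partition). The names `plan_job`, `Stage` and `Task` are illustrative only.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified model (not Spark's DAGScheduler).
# Assumption: these operations need a shuffle, so each one starts a new stage.
WIDE_OPS = {"groupByKey", "reduceByKey", "join", "repartition"}


@dataclass
class Task:
    stage_id: int
    partition: int  # one task processes one partition


@dataclass
class Stage:
    stage_id: int
    ops: list
    tasks: list = field(default_factory=list)


def plan_job(ops, num_partitions):
    """Split the chain of operations behind one action into stages and tasks."""
    groups, current = [], []
    for op in ops:
        if op in WIDE_OPS and current:
            groups.append(current)  # shuffle boundary: close the current stage
            current = []
        current.append(op)
    if current:
        groups.append(current)
    # Simplification: every stage gets one task per partition, same count for all.
    return [
        Stage(sid, group, [Task(sid, p) for p in range(num_partitions)])
        for sid, group in enumerate(groups)
    ]


# A word-count style job triggered by the action collect().
for stage in plan_job(["textFile", "flatMap", "map", "reduceByKey", "collect"], 4):
    print(stage.stage_id, stage.ops, len(stage.tasks))
# 0 ['textFile', 'flatMap', 'map'] 4
# 1 ['reduceByKey', 'collect'] 4
```

In real Spark the stage boundary sits at the shuffle itself (the map side of `reduceByKey` runs in the first stage) and post-shuffle stages may use a different partition count, so treat this only as a mental model of "one action, one job, several stages, many tasks".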

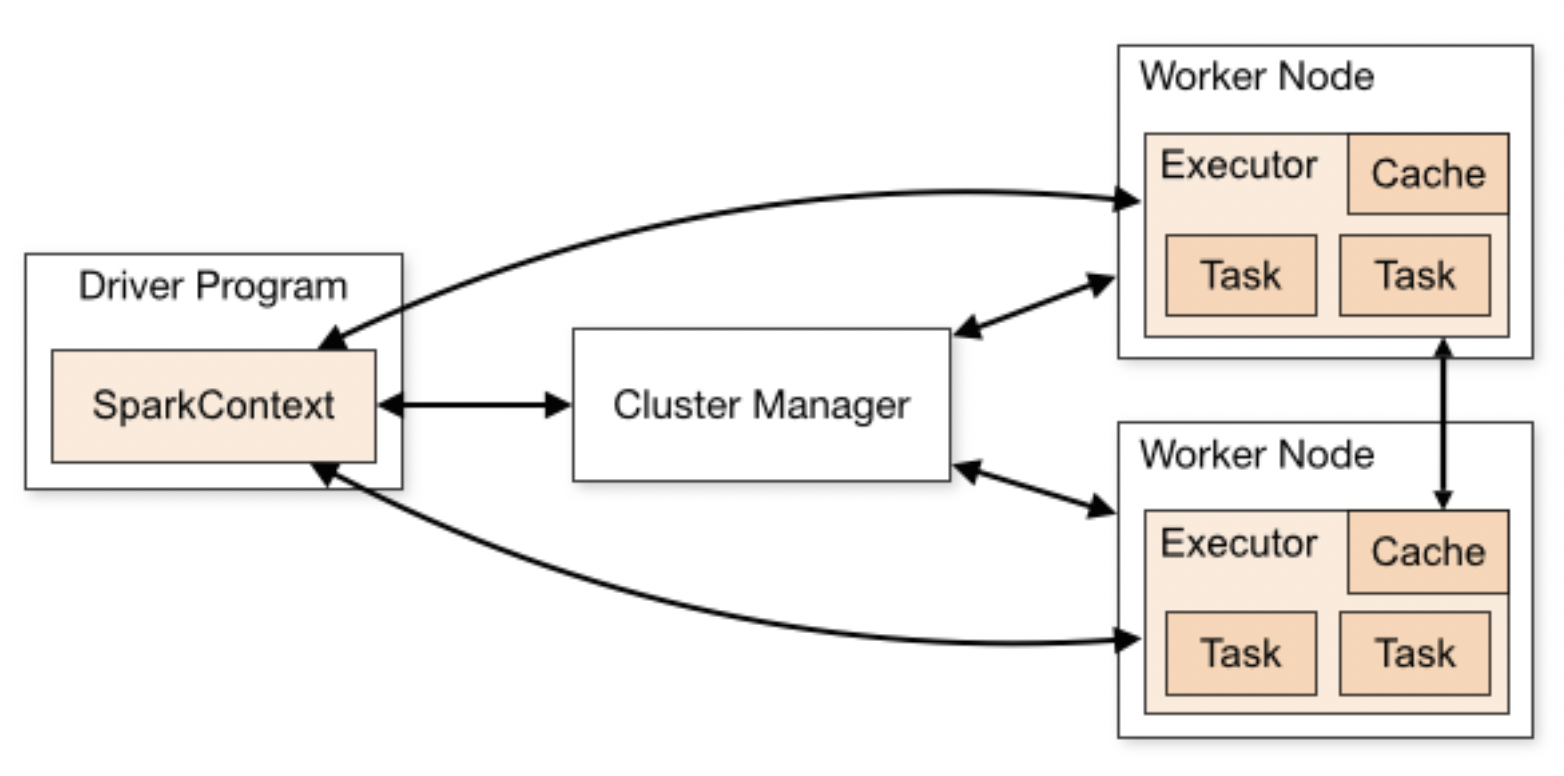

Spark Cluster Mode Overview

- Spark applications run as independent sets of processes on a cluster, coordinated by the SparkContext object in your main program (called the driver program)

- Specifically, to run on a cluster, the SparkContext can connect to several types of cluster managers (Standalone, Mesos, YARN, or Kubernetes), which allocate resources across applications

- Once connected, Spark acquires executors on nodes in the cluster, which are processes that run computations and store data for your application

- Next, it sends your application code (defined by JAR or Python files passed to SparkContext) to the executors

- Finally, SparkContext sends tasks to the executors to run
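The flow described above (connect to a cluster manager, acquire executors, send tasks, collect results) can be mimicked with a toy driver. This is a hypothetical sketch using only the standard library: `concurrent.futures.ThreadPoolExecutor` stands in for an executor's task threads, and the classes `ClusterManager` and `Executor` and the function `run_job` are illustrative, not Spark APIs. Executor placement is a naive first fit over worker nodes.

```python
from concurrent.futures import ThreadPoolExecutor

# Toy model only: these classes are hypothetical and are not Spark APIs.


class Executor:
    """A process on a worker node; here, a thread pool with one thread per core."""

    def __init__(self, executor_id, node, cores):
        self.executor_id = executor_id
        self.node = node
        self.cores = cores
        self.pool = ThreadPoolExecutor(max_workers=cores)

    def submit(self, fn, partition):
        # Run one task (fn applied to one partition) on a free thread.
        return self.pool.submit(fn, partition)


class ClusterManager:
    """Tracks free cores per worker node and hands out executors (first fit)."""

    def __init__(self, free_cores):
        self.free = dict(free_cores)  # worker node -> free cores

    def allocate(self, num_executors, cores_per_executor):
        executors = []
        for node in self.free:
            while (len(executors) < num_executors
                   and self.free[node] >= cores_per_executor):
                self.free[node] -= cores_per_executor
                executors.append(Executor(len(executors), node, cores_per_executor))
        return executors

    def release(self, executors):
        for ex in executors:
            ex.pool.shutdown()
            self.free[ex.node] += ex.cores


def run_job(cluster_manager, partitions, fn, num_executors=2, cores=2):
    """Driver side: acquire executors, send one task per partition, gather results."""
    executors = cluster_manager.allocate(num_executors, cores)
    if not executors:
        raise RuntimeError("cluster manager could not allocate any executor")
    try:
        # fn stands in for the application code shipped to the executors.
        # Tasks are assigned round-robin across the acquired executors.
        futures = [executors[i % len(executors)].submit(fn, part)
                   for i, part in enumerate(partitions)]
        return [f.result() for f in futures]  # results travel back to the driver
    finally:
        cluster_manager.release(executors)  # resources go back to the cluster manager


cm = ClusterManager({"worker-1": 4, "worker-2": 4})
print(run_job(cm, [[1, 2], [3, 4], [5, 6], [7, 8]], sum))  # [3, 7, 11, 15]
print(cm.free)  # {'worker-1': 4, 'worker-2': 4}
```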

Spark-Submit Execution Order

- 1) Submit the application with $SPARK_HOME/bin/spark-submit.

- 2) The driver program starts (its main() function runs) and creates a SparkContext, which establishes the connection to the Spark cluster.

- 3) The driver requests executor resources from the cluster manager (Standalone, Mesos, YARN, Kubernetes).

- 4) The cluster manager instructs the worker nodes to launch executors.

- 5) The driver program uses DAG scheduling to split the work into tasks and assigns them to the executors.

- 6) The executors run the tasks in multiple threads and send the results back to the driver program.

- 7) When the application completes, its resources are returned to the cluster manager.
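Step 1 is normally a shell command. The helper below only assembles the argument list for `spark-submit` from standard options (`--class`, `--master`, `--deploy-mode`, `--num-executors`, `--executor-cores`, `--executor-memory`); it does not run anything. `--num-executors` is honored on YARN, which is the assumed default master here. The `/opt/spark` path, the jar `wordcount.jar`, the class `com.example.WordCount` and the input path are hypothetical placeholders, and `build_spark_submit` is an illustrative name.

```python
import shlex


def build_spark_submit(app_jar, main_class, master="yarn", deploy_mode="cluster",
                       num_executors=2, executor_cores=2, executor_memory="2g",
                       app_args=(), spark_home="/opt/spark"):
    """Assemble (but do not run) a spark-submit command line."""
    if deploy_mode not in ("client", "cluster"):
        raise ValueError("deploy_mode must be 'client' or 'cluster'")
    cmd = [
        f"{spark_home}/bin/spark-submit",          # step 1: submit the application
        "--class", main_class,                     # class whose main() the driver runs
        "--master", master,                        # which cluster manager to contact
        "--deploy-mode", deploy_mode,              # where the driver process runs
        "--num-executors", str(num_executors),     # executors to request (YARN)
        "--executor-cores", str(executor_cores),   # cores (concurrent task slots) per executor
        "--executor-memory", executor_memory,      # heap size per executor
        app_jar,
    ]
    cmd.extend(app_args)  # arguments passed to the application's main()
    return cmd


cmd = build_spark_submit("wordcount.jar", "com.example.WordCount",
                         app_args=["hdfs:///data/input"])
print(shlex.join(cmd))
# /opt/spark/bin/spark-submit --class com.example.WordCount --master yarn --deploy-mode cluster --num-executors 2 --executor-cores 2 --executor-memory 2g wordcount.jar hdfs:///data/input
```

Passing the list to `subprocess.run(cmd, check=True)` on a machine with Spark installed would perform step 1; steps 2 to 7 then happen inside Spark and the cluster manager.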

Running on YARN

- When Spark applications run on a YARN cluster manager, resource management, scheduling, and security are controlled by YARN.

- In YARN, each application instance has an ApplicationMaster process, which is the first container started for that application.

- The ApplicationMaster is responsible for requesting resources from the ResourceManager.

- Once the resources are allocated, the ApplicationMaster instructs NodeManagers to start containers on its behalf.

- ApplicationMasters eliminate the need for an active client: the process starting the application can terminate, and coordination continues from a process managed by YARN running on the cluster.
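The YARN negotiation described above can be sketched as a toy `ResourceManager` that first grants the ApplicationMaster container and then grants executor containers on NodeManagers with enough free memory and vcores. All classes and functions here are hypothetical; first-fit placement, the 1-vcore ApplicationMaster, and stopping (instead of waiting) when the cluster is full are simplifying assumptions, not YARN's actual scheduler behavior.

```python
from dataclasses import dataclass

# Toy model of YARN resource negotiation; all names are hypothetical.


@dataclass
class Container:
    container_id: int
    node: str        # NodeManager host that runs the container
    memory_mb: int
    vcores: int
    role: str        # "ApplicationMaster" or "executor"


class ResourceManager:
    def __init__(self, node_capacity):
        # NodeManager host -> [free memory in MB, free vcores]
        self.free = {host: list(cap) for host, cap in node_capacity.items()}
        self.next_id = 1

    def allocate(self, memory_mb, vcores, role):
        """Grant one container on the first NodeManager with room (first fit)."""
        for host, (mem, cores) in self.free.items():
            if mem >= memory_mb and cores >= vcores:
                self.free[host] = [mem - memory_mb, cores - vcores]
                container = Container(self.next_id, host, memory_mb, vcores, role)
                self.next_id += 1
                return container
        return None  # real YARN would keep the request pending instead


def launch_application(rm, am_memory_mb, num_executors, executor_memory_mb, executor_vcores):
    # 1. The first container of the application hosts its ApplicationMaster.
    am = rm.allocate(am_memory_mb, 1, "ApplicationMaster")
    if am is None:
        raise RuntimeError("no room for the ApplicationMaster")
    # 2. The ApplicationMaster requests executor containers from the ResourceManager.
    granted = []
    for _ in range(num_executors):
        container = rm.allocate(executor_memory_mb, executor_vcores, "executor")
        if container is None:
            break  # simplification: stop instead of waiting for resources
        granted.append(container)
    # 3. The AM asks each NodeManager to start the containers granted on its host.
    launch_plan = {}
    for container in granted:
        launch_plan.setdefault(container.node, []).append(container.container_id)
    return am, launch_plan


rm = ResourceManager({"nm-1": (4096, 4), "nm-2": (4096, 4)})
am, plan = launch_application(rm, am_memory_mb=1024, num_executors=3,
                              executor_memory_mb=2048, executor_vcores=2)
print(am.node, plan)  # nm-1 {'nm-1': [2], 'nm-2': [3, 4]}
```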

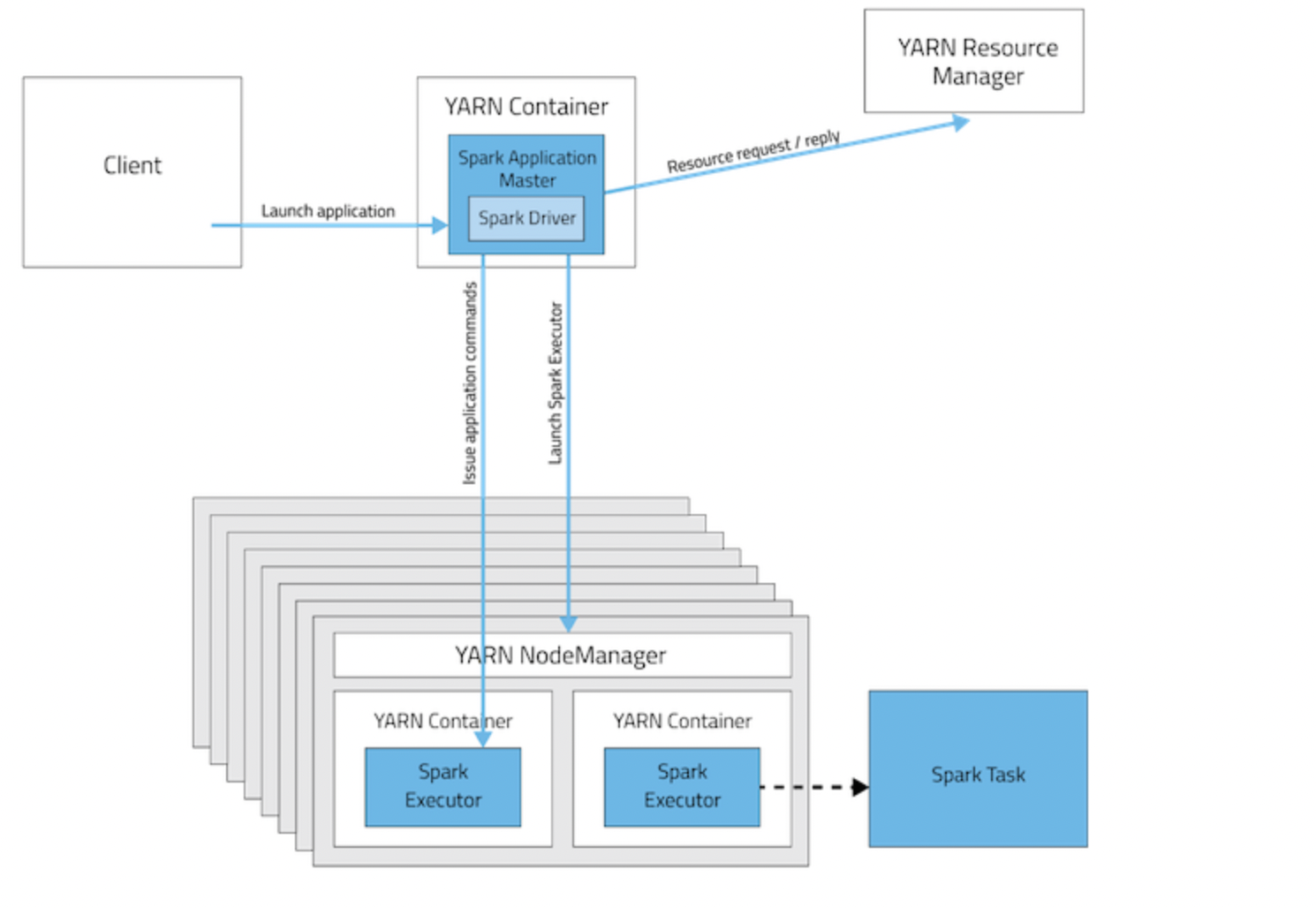

YARN Cluster Mode

- The Spark driver runs inside the ApplicationMaster on a cluster host.

- A single process in a YARN container is responsible for both driving the application and requesting resources from YARN.

- The client that launches the application does not need to run for the lifetime of the application.

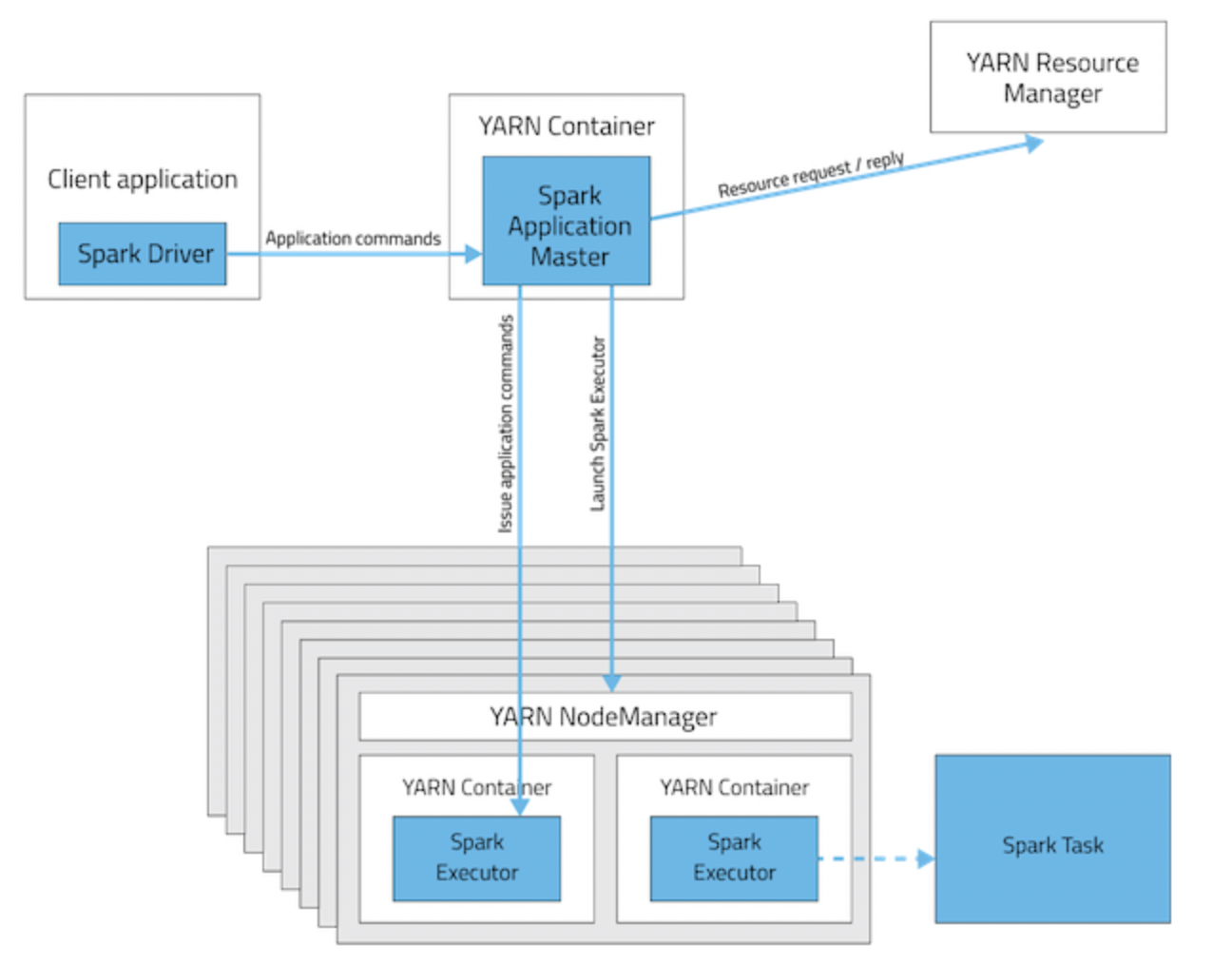

YARN Client Mode

- The Spark driver runs on the host where the job is submitted.

- The ApplicationMaster is responsible only for requesting executor containers from YARN.

- After the containers start, the client (which hosts the driver) communicates with the containers to schedule work.
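The practical difference between the two YARN modes is where the driver lives, and therefore whether the submitting client has to keep running. A minimal sketch, with the hypothetical function `yarn_layout` and placeholder host names:

```python
def yarn_layout(deploy_mode, submit_host, am_host):
    """Toy model: where the driver runs and what the ApplicationMaster does."""
    if deploy_mode == "cluster":
        # One YARN container (the AM) both drives the app and requests executors.
        return {
            "driver_host": am_host,
            "am_role": "run driver and request executors",
            "client_can_exit_after_submit": True,
        }
    if deploy_mode == "client":
        # The driver stays on the submitting host; the AM only requests executors.
        return {
            "driver_host": submit_host,
            "am_role": "request executors only",
            "client_can_exit_after_submit": False,
        }
    raise ValueError(f"unknown deploy mode: {deploy_mode!r}")


for mode in ("cluster", "client"):
    print(mode, yarn_layout(mode, submit_host="edge-node", am_host="nm-2"))
```

Because the client-mode driver runs on the submitting host, closing that process ends the application, which is why long-running batch jobs are often submitted in cluster mode.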

Deployment Mode Summary

| Mode | YARN Cluster Mode | YARN Client Mode | Spark Standalone |
|---|---|---|---|
| Driver runs in | ApplicationMaster | Client | Client |
| Requests resources | ApplicationMaster | ApplicationMaster | Client |
| Starts executor processes | YARN NodeManager | YARN NodeManager | Spark Workers (Slaves) |
| Persistent services | YARN ResourceManager and NodeManagers | YARN ResourceManager and NodeManagers | Spark Masters & Workers |
| Supports Spark Shell | No | Yes | Yes |
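The summary table can also be encoded as data, for example to check which modes allow an interactive shell. The property values are copied from the table; the tuple keys and the `modes_for` helper are hypothetical, and the Spark Standalone column is treated as client deploy mode because the table places its driver in the client.

```python
# (cluster manager, deploy mode) -> properties, copied from the summary table.
DEPLOYMENT_MODES = {
    ("YARN", "cluster"): {
        "driver_runs_in": "ApplicationMaster",
        "requests_resources": "ApplicationMaster",
        "starts_executors": "YARN NodeManager",
        "supports_spark_shell": False,
    },
    ("YARN", "client"): {
        "driver_runs_in": "Client",
        "requests_resources": "ApplicationMaster",
        "starts_executors": "YARN NodeManager",
        "supports_spark_shell": True,
    },
    ("Standalone", "client"): {
        "driver_runs_in": "Client",
        "requests_resources": "Client",
        "starts_executors": "Spark Workers",
        "supports_spark_shell": True,
    },
}


def modes_for(interactive):
    """Modes usable for a workload; a shell needs the driver on the client."""
    return [key for key, props in DEPLOYMENT_MODES.items()
            if props["supports_spark_shell"] or not interactive]


print(modes_for(interactive=True))  # [('YARN', 'client'), ('Standalone', 'client')]
```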